
Data Science: Implementing a Naive Bayesian Classifier using the Empirical Density

This story is part of my Data Science series.

In my previous story (see here), I provided an example implementation of a random forest for a classification problem based on this data set.

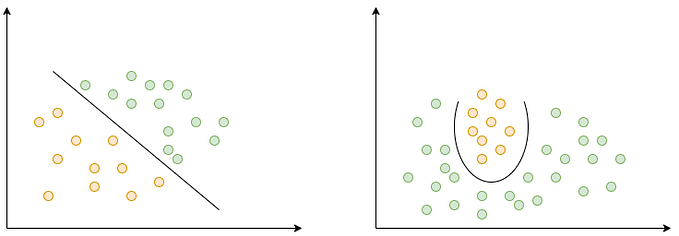

As a further approach to solving this problem, I want to use a Naive Bayes classifier, whose implementation is the focus of this story.

In general, since the data set is quite large, the Naive Bayes approach is a good choice despite the rather strong assumptions it imposes on the data. You can find more details on these assumptions in the theoretical outline here.
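The central assumption behind the "naive" label is that the features are conditionally independent given the class. Writing C for the class variable and $X = (X_1, \dots, X_n)$ for a vector of n individual features (the component notation is introduced here for illustration), it can be stated as

$$P(X \mid C) = \prod_{i=1}^{n} P(X_i \mid C),$$

so only one-dimensional class-conditional distributions have to be estimated from the data, rather than one joint distribution over all features.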

As a quick reminder, the Naive Bayes classifier is based on the following relation between conditional probabilities (Bayes' theorem):

$$P(C \mid X) = \frac{P(C)}{P(X)} \, P(X \mid C),$$

where C is the categorical outcome random variable and X is the feature random variable.
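To make the relation concrete, here is a minimal Python sketch, assuming discrete (categorical) features, for which the empirical density reduces to relative frequencies in the training data. It estimates P(C) and each P(X_i | C) by counting, multiplies them under the naive independence assumption, and normalises by their sum over all classes, which takes the role of P(X). The helpers `fit_empirical_nb` and `posterior` and the toy data are hypothetical and not taken from this story's implementation.

```python
from collections import Counter, defaultdict


def fit_empirical_nb(X, y):
    """Estimate P(C) and each P(X_i = v | C) by relative frequencies.

    Hypothetical helper for illustration; assumes every feature is discrete.
    """
    n_samples = len(y)
    class_counts = Counter(y)
    priors = {c: k / n_samples for c, k in class_counts.items()}

    # counts[c][i][v] = number of samples of class c whose i-th feature equals v
    counts = {c: defaultdict(Counter) for c in class_counts}
    for row, c in zip(X, y):
        for i, v in enumerate(row):
            counts[c][i][v] += 1

    # Normalise the counts per class to obtain empirical conditional probabilities
    likelihoods = {
        c: {
            i: {v: k / class_counts[c] for v, k in feature_counts.items()}
            for i, feature_counts in counts[c].items()
        }
        for c in class_counts
    }
    return priors, likelihoods


def posterior(x, priors, likelihoods):
    """Return P(C = c | X = x) for every class c under the naive independence assumption."""
    scores = {}
    for c, prior in priors.items():
        score = prior  # P(C = c)
        for i, v in enumerate(x):
            # Multiply by P(X_i = v | C = c); values never seen with class c get 0 (no smoothing)
            score *= likelihoods[c][i].get(v, 0.0)
        scores[c] = score
    # Naive-model estimate of P(X = x): the sum of the joint scores over all classes
    evidence = sum(scores.values())
    if evidence == 0:
        raise ValueError("x has zero empirical probability under every class")
    return {c: s / evidence for c, s in scores.items()}


# Toy data (made up for illustration): two binary features, binary outcome
X_train = [(0, 1), (1, 1), (1, 0), (0, 0), (1, 1), (0, 1)]
y_train = [0, 1, 1, 0, 1, 1]

priors, likelihoods = fit_empirical_nb(X_train, y_train)
print(posterior((0, 1), priors, likelihoods))
```

For the query (0, 1), the unnormalised scores are 1/3 * 1 * 1/2 = 1/6 for class 0 and 2/3 * 1/4 * 3/4 = 1/8 for class 1, so the posterior is 4/7 for class 0 and 3/7 for class 1. Without smoothing, a single feature value never observed with a class forces that class's score to zero; Laplace (additive) smoothing is the usual remedy.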

As we see, in order to estimate the probability that the outcome is 1, given that the feature takes the value…